9 RAP

9.1 Principles

Reproducible Analytical Pipelines (RAPs) have evolved from the UK Government’s Analysis Function, whose 2022 Reproducible Analytical Pipelines (RAP) strategy sets out the following principles.

"RAPs are automated statistical and analytical processes. They incorporate elements of software engineering best practice to ensure that the pipelines are reproducible, auditable, efficient, and high quality.

RAPs increase the efficiency of statistical and analytical processes, delivering value. Reproducibility and auditability increase trust in the statistics. The pipelines are easier to quality assure than manual processes, leading to higher quality.

A RAP will:

improve the quality of the analysis

increase trust in the analysis by producers, their managers and users

create a more efficient process

improve business continuity and knowledge management

To achieve these benefits, at a minimum a RAP must:

minimise manual steps, for example copy-paste, point-click, or drag-drop operation; where it is necessary to include a manual step in the process this must be documented as described in the following bullet points

be built using open-source software, which is available to anyone, preferably R or Python

deepen technical and quality assurance processes with peer review to ensure that the process is reproducible and that the requirements described in the following bullet points have been met

guarantee an audit trail using version control software, preferably Git

be open to anyone – this can be allowed most easily using file and code sharing platforms

follow existing good practice for quality assurance

contain well-commented code and have documentation embedded and version controlled within the product, rather than saved elsewhere

Notes:

There may be restrictions, such as access to databases, which stop analysis producers building a RAP for their full end-to-end process. In this case, the previously described requirements apply to the selected part of the process.

There may be restrictions, such as sensitive or confidential content, which stop analysis producers from sharing their RAP publicly. In this case, it may be possible to share the RAP within a department or organisation instead.

It is recommended that where possible a RAP should be built collaboratively – this will improve the quality of the final product and helps to allow knowledge sharing.

There is no specific tool that is required to build a RAP, but both R and Python provide the power and flexibility to carry out end-to-end analytical processes, from data source to final presentation.

Once the minimum RAP has been implemented, statisticians and analysts should attempt to further develop their pipeline using:

functions or code modularity

unit testing of functions

error handling for functions

documentation of functions

packaging

code style

input data validation

logging of data and the analysis

continuous integration

dependency management"
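As a rough illustration of several of the further-development practices quoted above (modular functions, documentation, input data validation, error handling, logging and unit testing), here is a minimal Python sketch. It is not taken from the RAP strategy: the column names, function names and logger name are all hypothetical, and a real pipeline would more likely use a data frame library and a test runner such as pytest.

```python
import logging

logger = logging.getLogger("rap_example")  # hypothetical logger name

REQUIRED_COLUMNS = {"ward", "count"}  # hypothetical column names


def validate_rows(rows):
    """Input data validation: fail early, with a clear message, on bad input."""
    if not rows:
        raise ValueError("input data is empty")
    for i, row in enumerate(rows):
        missing = REQUIRED_COLUMNS - set(row)
        if missing:
            raise ValueError(f"row {i} is missing columns: {sorted(missing)}")
        if not isinstance(row["count"], int) or row["count"] < 0:
            raise ValueError(f"row {i} has an invalid count: {row['count']!r}")


def total_by_ward(rows):
    """Sum counts per ward: one small, documented, testable pipeline step."""
    validate_rows(rows)
    totals = {}
    for row in rows:
        totals[row["ward"]] = totals.get(row["ward"], 0) + row["count"]
    logger.info("aggregated %d rows into %d wards", len(rows), len(totals))
    return totals


def test_total_by_ward():
    """Unit test: known input gives known output."""
    rows = [
        {"ward": "A", "count": 2},
        {"ward": "B", "count": 5},
        {"ward": "A", "count": 3},
    ]
    assert total_by_ward(rows) == {"A": 5, "B": 5}


def test_rejects_negative_count():
    """Unit test: invalid input is rejected rather than silently summed."""
    try:
        total_by_ward([{"ward": "A", "count": -1}])
    except ValueError:
        return
    raise AssertionError("expected a ValueError for a negative count")
```

Keeping each step as a small function like this is what makes the other practices possible: the function can be tested in isolation, documented where it is defined, and reused when the pipeline is later packaged.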

9.2 Strategy goals

The Government’s RAP strategy includes three goals:

tools – ensure that analysts have the tools they need to implement RAP principles.

capability – give analysts the guidance, support and learning to be confident implementing the RAPs.

culture – create a culture of robust analysis where the RAP principles are the default for analysis, leaders engage with managing analysis as software, and users of analysis understand why this is important.

9.3 Platforms for Reproducible Analysis

The two lists below are from the Government’s RAP strategy, to which we’ve added notes on current SCC status.

9.3.1 For RAPs that meet the minimum criteria

version control software, that is, git - BI Team laptops & OSCAR desktop

open-source programming languages and flexibility to add more (Python, R, Julia, JavaScript, C++, Java/Scala etc.) - R & Python on BI Team laptops, R on OSCAR desktop

package and environment managers for each of the available languages - renv for R has not been tested yet (it should have been by now); when extending Council GIS, the version of ArcGIS Pro we use restricts Conda use, e.g. no virtual environments

packages and libraries for open-source programming languages, either through direct access to well-known libraries, for example, npm, PyPI, CRAN, or through a proxy repository system, for example, Artifactory - BI Team laptops & OSCAR desktop

individual storage, for example, home directory - SCC OneDrive

shared storage, for example, s3, cloud storage, with fine-grained access control, accessible programmatically - don’t have, should we look at Azure Blob storage?

integrated development environments suitable for the available languages – RStudio for R, Visual Studio Code for Python and so on - BI Team laptops & OSCAR desktop

In summary, with the exception of shared storage (e.g. Azure Blob storage), some SCC data analysts have access to all of the platforms needed for RAPs that meet the minimum criteria.

9.3.2 For further development of RAPs

source control platforms, for example, GitHub, GitLab or BitBucket - trialling a GitHub organisation (github.com/scc-pi)

continuous integration tools, for example, GitHub Actions, GitLab CI, Travis CI, Jenkins, Concourse - trialling GitHub Actions, but CI is limited by the current GitHub security considerations (GitHub security & data protection)

make-like tools for reproducible workflows, for example, make - identified the potential value of the targets R package, but not tested yet

relational database management software, for example, PostgreSQL, that is available to users - corporate solution being investigated and there’s some DBMS access available via OSCAR desktop

orchestration systems for pipelines and workflows, for example, airflow, NiFi - don’t have

internal-facing servers to host html-rendered documentation - request denied

external-facing servers with authentication to host end-products such as web applications or APIs - don’t have

big data tool, for example, Presto or Athena, Spark, dask and so on, or access to large memory capability - don’t have

reproducible infrastructure and containers, for example, docker - docker on BI Team laptops but not tested yet

In summary, SCC data analysts don’t have most of the platforms needed for further development of RAPs. Docker and targets are accessible and could be trialled. GitHub is being trialled, and purchasing it would ease some current security restrictions and could cover the CI and server platform items. Orchestration systems and big data tools have not been considered yet.
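To give a feel for what a make-like tool such as make or targets does, the sketch below declares each pipeline step together with the steps it depends on, then runs them in dependency order. It is a simplified Python illustration, not how targets is implemented: the `run_pipeline` function and the three example targets are hypothetical, and unlike real make-like tools it rebuilds everything on each run rather than skipping steps that are already up to date.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_pipeline(targets):
    """Build every target once, in dependency order.

    `targets` maps a target name to (function, [names of upstream targets]).
    Each function receives the results of its upstream targets as arguments.
    """
    graph = {name: set(deps) for name, (_, deps) in targets.items()}
    results = {}
    for name in TopologicalSorter(graph).static_order():
        func, deps = targets[name]
        results[name] = func(*(results[d] for d in deps))
    return results


# Hypothetical three-step pipeline: raw data -> cleaned data -> summary.
example_targets = {
    "summary": (lambda clean: sum(clean) / len(clean), ["clean"]),
    "clean": (lambda raw: [x for x in raw if x is not None], ["raw"]),
    "raw": (lambda: [3, None, 5, 4], []),
}

results = run_pipeline(example_targets)
print(results["summary"])  # mean of the cleaned values
```

The targets are deliberately declared out of order here: the run order comes from the declared dependencies, not from the order in which the steps happen to be written down.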

9.4 How could RAP sit within SCC data analysis?

NB This sub-section is currently from the perspective of one individual. It needs a broader perspective, particularly from more experienced colleagues in PAS and data analysis team managers.

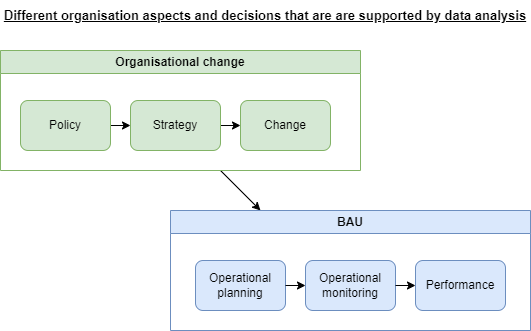

Sheffield City Council has aspirations to be data-driven and to make better evidence-based decisions. Data analysis can support organisational change decisions on, for example, Planning Policy, Corporate Strategy, and changes to the operating model for Early Help in Children’s Services. Data analysis can also support continuous improvement under BAU (Business As Usual), helping managers understand how best to deploy their resources over the next month, highlighting issues that arose last week, and reporting performance metrics to senior managers.

The efficiencies from the automation aspect of RAPs may suit more repetitive (e.g. monthly) data analysis outputs that support BAU. The quality assurance aspect of RAPs may be more important for complex data analysis that supports major organisational change decisions. In practice, team culture and individual capability may have more to do with adopting RAP than the type or purpose of data analysis output. For example, an individual may be more likely to pursue RAP if they have a software development background and work in the BI Team, which was initially experimental in nature and doesn’t have a large catalogue of BAU output or well-established quality assurance procedures.

Elements of RAP have been used by SCC data analysts, but a full RAP has not yet been developed. The Council could pilot one or two RAPs. These should be done collaboratively, i.e. with members of different data analysis teams. One could re-engineer an existing process; another could be a new piece of data analysis.

9.5 MLOps

Machine Learning Operations (MLOps) is more specifically about automating the building, training, deployment, maintenance, and further development of models. RAPs cover a broader range of data analysis output.

9.6 Further resources

9.6.1 Guidance

Reproducible Analytical Pipelines (RAP), Government Analysis Function

Reproducible Analytical Pipelines (RAP) strategy, Government Analysis Function

RAP Community of Practice, NHS Digital

Choose tools and infrastructure to make better use of your data, Cabinet Office

9.6.2 Training

Introduction to Reproducible Analytical Pipelines (RAP), free online ONS Data Science Campus Learning Hub course (hub now available to local government analysts)

Reproducible Analytical Pipelines (RAP) using R, 7hr free online Udemy course by Matthew Gregory

Reproducible Analytical Pipeline learning materials, Data Science Campus

9.6.3 Books

RAP Companion, by Matthew Gregory & Matthew Upson

Building reproducible analytical pipelines with R, by Bruno Rodrigues

The Turing Way: Handbook to Reproducible, Ethical and Collaborative Data Science, by the Turing Way Community

Open Source MLOps, by Matthew Upson (release August 2023)

Quality assurance of code for analysis and research - the Quack book, ONS

9.6.4 Blogs

Why we’re getting our data teams to RAP

Transforming the process of producing official statistics, Civil Service

Econometrics and Free Software, Bruno Rodrigues

Data Version Control for Reproducible Analytical Pipelines, Matthew Upson

9.6.5 GitHub templates

rapR-package-template, Craig Shenton

NHSDigital/rap-package-template

govcookiecutter: A template for data science projects